Feature #2222

closedNDNLPv2 design: link service

100%

Description

Design link service API in NFD Face System.

This design shall be compatible with all NDNLPv2 features.

Initial API definition only needs to include: NACK, Fragmentation, NextHopFaceId, CachingPolicy, IncomingFaceId.

Files

Updated by Junxiao Shi over 11 years ago

Updated by Junxiao Shi over 11 years ago

- Assignee set to Junxiao Shi

I'll provide the design by Nov 26.

Implementation work may be reassigned.

Updated by Junxiao Shi over 11 years ago

Updated by Junxiao Shi over 11 years ago

Background¶

nfd::Face class is the face abstraction in NFD.

Currently, send path and receive path APIs of this class are:

class Face

{

public: // send path

virtual void

sendInterest(Interest) = 0;

virtual void

sendData(Data) = 0;

public: // receive path

EventEmitter<Interest> onReceiveInterest;

EventEmitter<Data> onReceiveData;

protected: // receive path

void

decodeAndDispatchInput(Block);

};

A concrete Face implementation:

- overrides two send methods directly

- calls non-virtual method decodeAndDispatchInput with a network-layer packet, which in turn emits a receive event

This looks like:

class SomeFace : public Face

{

public: // send path

virtual void

sendInterest(Interest interest)

{

m_socket.write(interest.wireEncode());

}

virtual void

sendData(Data data)

{

m_socket.write(data.wireEncode());

}

public: // receive path

void

receiveFromSocket()

{

Block block = m_socket.recv();

this->decodeAndDispatchInput(block);

}

private:

Socket m_socket;

};

Problem¶

Under the current API, adding a 2.5-layer feature to a face requires substantial modification in multiple places.

For example, adding packet fragmentation and reassembly needs to:

- add fragmentation logic in

sendInterestandsendData - add reassembly logic in

receiveFromSocket

The goal of the new design is to simplify the process of adding 2.5-layer features.

Design¶

class Face

{

public: // send path

virtual void

sendInterest(Interest interest)

{

this->networkSend(interest.wireEncode());

}

virtual void

sendData(Data data)

{

this->networkSend(data.wireEncode());

}

protected: // send path

virtual void

networkSend(Block block)

{

this->linkSend(block);

}

virtual void

linkSend(Block) = 0;

public: // receive path

EventEmitter<Interest> onReceiveInterest;

EventEmitter<Data> onReceiveData;

protected: // receive path

void

networkReceive(Block block)

{

// the original decodeAndDispatchInput function

}

/** \brief represents a link layer packet handler

* \param block a link layer packet

* \return whether the packet has been processed

* and shouldn't be processed by another link receive handler

*/

typedef std::function<bool(const Block& block)> LinkReceiveHandler;

/** \brief adds a link layer packet handler for a specific TLV-TYPE

*

* Link layer packets of this TLV-TYPE are passed to handlers in ascending priority,

* until a handler returns true to terminate the processing.

*/

void

addLinkReceiveHandler(uint32_t tlvType, int priority, LinkReceiveHandler handler);

void

linkReceive(Block block)

{

auto& handlers = m_linkReceiveHandlers[block.type()];

for (auto&& h : handlers) {

if (h(block)) return;

}

NFD_LOG_TRACE("unhandled link-layer packet " << block.type());

}

private: // receive path

std::unordered_map<uint32_t, std::multimap<int, LinkReceiveHandler>>

m_linkReceiveHandlers; // tlvType => priority => handler

void

initializeDefaultLinkReceiverHandlers()

{

// accept network-layer packets directly appearing at link-layer

this->addLinkReceiveHandler(tlv::Interest, 9999,

[this] (Block block) { this->networkReceive(block); return true; });

this->addLinkReceiveHandler(tlv::Data, 9999,

[this] (Block block) { this->networkReceive(block); return true; });

}

};

A simple concrete Face implementation just needs to override linkSend to send packet on the socket, and invoke linkReceive when a packet is received from the socket.

To employ packet fragmentation and reassembly, a concrete Face implementation could:

- override

networkSendto call packet fragmentation feature - provide a link receive handler for fragmented packet type

Updated by Alex Afanasyev over 11 years ago

Updated by Alex Afanasyev over 11 years ago

I don't see too much value in introducing so many redirects, especially to the base class. Link layer does not make sense for all face types.

The intention, which is invalidated by the proposed design, was to ensure that each individual face explicitly handles sent Interest and Data packets. There is some inconsistency with this intention regarding receiving packets, but it is just because this feature wasn't yet used by any faces.

I will comment more later, but "link-layer" processing can be introduced within specific face implementation without "substantial" changes.

Updated by Junxiao Shi over 11 years ago

Updated by Junxiao Shi over 11 years ago

What about implementing note-2 design as nfd::LayeredFace, a subclass of nfd::Face?

Concrete face implementations, such as EthernetFace, could derive from LayeredFace.

Updated by Davide Pesavento over 11 years ago

Updated by Davide Pesavento over 11 years ago

Junxiao Shi wrote:

What about implementing note-2 design as

nfd::LayeredFace, a subclass ofnfd::Face?Concrete face implementations, such as

EthernetFace, could derive fromLayeredFace.

This is exactly what I (very briefly) proposed to Alex in a F2F meeting in early September, as part of the design for the V2V wifi face.

Updated by Alex Afanasyev over 11 years ago

Updated by Alex Afanasyev over 11 years ago

Here is my suggestion for the design. The intention is to separate "2.5-layer" into separate abstraction that can be plugged between NDN side and link-layer side of the Face.

class FaceWithTwoPointFiveLayer : public Face

{

public: // send path

virtual void

sendInterest(const Interest& interest)

{

m_twoPointFiveLayer->send(interest.wireEncode());

}

virtual void

sendData(const Data& data)

{

m_twoPointFiveLayer->send(data.wireEncode());

}

// private: // in specific Face implementations

// void

// send(const Block& dataBlock)

// {

// // ... send to link-laye...

// ...

// }

// void

// send(const std::vector<Block>& dataBlocks)

// {

// // (optional)

// // ... send to link-layer (with scatter-gather)...

// ...

// }

// void

// receive(const Block& dataBlock)

// {

// // ... receive from link-layer ...

// m_twoPointFiveLayer->receive(dataBlock);

// }

public: // receive path

EventEmitter<Interest> onReceiveInterest;

EventEmitter<Data> onReceiveData;

private:

unique_ptr<TwoPointFiveLayer> m_twoPointFiveLayer;

};

//////////////////////////////////////////////////////

class TwoPointFiveLayer

{

public:

TwoPointFiveLayer(EventEmitter<Interest>& onReceiveInterest,

EventEmitter<Data>& onReceiveData,

std::function<void (const Block&)> sendBlock)

: onReceiveInterest(onReceiveInterest)

, onReceiveData(onReceiveData)

, sendBlock(sendBlock)

{

}

virtual void

send(const Block& block) = 0;

virtual void

receive(const Block& block) = 0;

protected:

EventEmitter<Interest>& m_onReceiveInterest;

EventEmitter<Data>& m_onReceiveData;

std::function<void (const Block&)> m_sendBlock;

};

class PassThroughLayer : public TwoPointFiveLayer

{

public:

/**

* @param onReceiveInterest "callback" to dispatch received Interest to the network layer and up

* @param onReceiveData "callback" to dispatch received Data to the network layer and up

* @param sendBlock callback to send data block over link layer

*/

PassThroughLayer(EventEmitter<Interest>& onReceiveInterest,

EventEmitter<Data>& onReceiveData,

std::function<void (const Block&)> sendBlock)

: TwoPointFiveLayer(onReceiveInterest, onReceiveData, sendBlock)

{

}

virtual void

send(const Block& block)

{

sendBlock(block);

}

virtual void

receive(const Block& block)

{

try {

if (element.type() == tlv::Interest)

{

shared_ptr<Interest> i = make_shared<Interest>();

i->wireDecode(element);

this->onReceiveInterest(*i);

}

else if (element.type() == tlv::Data)

{

shared_ptr<Data> d = make_shared<Data>();

d->wireDecode(element);

this->onReceiveData(*d);

}

else

return false;

return true;

}

catch (tlv::Error&) {

return false;

}

}

};

Updated by Davide Pesavento over 11 years ago

Updated by Davide Pesavento over 11 years ago

I will discuss Alex's design proposal with Giulio to see if it can work for the vehicular face as well.

Meanwhile, I think PassThroughLayer is not needed. TwoPointFiveLayer can be a concrete class instead of abstract, providing default "passthrough" implementations for send and receive that subclasses can call in their overrides.

Updated by Junxiao Shi over 11 years ago

Updated by Junxiao Shi over 11 years ago

I disagree with the design in note-6, for the following reasons:

- The separation of link layer and network layer is a norm, rather than an exception. The only faces with only network layer and no link layer are: InternalFace (for management), and DummyFace (for testing). All other faces have a link layer.

- Compared to note-2 design, note-6 design does not simplify the implementation of a face with multiple L2.5 features.

On the send path, both designs require a function to be modified:

Face::linkSendin note-2, andTwoPointFiveLayer::sendin note-6.

I know there's some problems in note-2 design, most prominently:

- The receive path is asymmetric with the send path: receive path has table-based dispatch, but send path doesn't have it. I plan to drop table-based dispatch in the receive path, in favor of conditional statements.

I intend to revise note-2 design into the final design.

If note-6 design has advantages over note-2, please state what are the advantages, and I'll try to match them in my next design.

Updated by Alex Afanasyev over 11 years ago

Updated by Alex Afanasyev over 11 years ago

- The separation of link layer and network layer is a norm, rather than an exception. The only faces with only network layer and no link layer are: InternalFace (for management), and DummyFace (for testing). All other faces have a link layer.

I cannot disagree with this statement, however it is irrelevant to either of the proposed designs. My objective is to have a design where something can be easily shuffled inside the face, tunneling network-link and link-network layer operations. It is not obvious and not clear without separate abstraction.

- Compared to note-2 design, note-6 design does not simplify the implementation of a face with multiple L2.5 features. On the send path, both designs require a function to be modified:

Face::linkSendin note-2, andTwoPointFiveLayer::sendin note-6.

This is not true. There is a big difference in requiring modification of the face or requiring modification of TwoPointFiveLayer.

I know there's some problems in note-2 design, most prominently:

- The receive path is asymmetric with the send path: the receive path has a table-based dispatch, but the send path doesn't have it. I plan to drop table-based dispatch in the receive path, in favor of conditional statements.

Please state requirements that 2.5-layer need to support, otherwise I don't even understand this "problem". I kind of vaguely understood what could be receive-path issue, but don't see how those issues related to send path.

Updated by Junxiao Shi over 11 years ago

Updated by Junxiao Shi over 11 years ago

- Description updated (diff)

The concept of "2.5-layer" is not clearly defined. I have updated issue description to say "link layer".

My objective is to have a design where something can be easily shuffled inside the face, tunneling network-link and link-network layer operations.

It is not obvious and not clear without separate abstraction.

Both NDNLP fragmentation and BFD are on link layer, not between network-link.

A nfd::Face has three layers:

- network layer: Interest, Data, Nack, etc

- link layer: NDNLP, BFD, etc; network layer packet MAY directly appear on link layer on some kind of faces

- socket layer: TCP/UNIX stream, UDP datagram, Ethernet frame, etc

note-2 design allows either layer to be replaced:

| layer | send path | receive path |

|---|---|---|

| network | sendInterest sendData |

networkReceive |

| link | networkSend |

link receive handlers |

| socket | linkSend |

caller of linkReceive |

(I know function names looks out of place, but they can be revised).

note-6 design puts network and socket layer in one place, and pulls link layer in another place.

This is very confusing.

There is a big difference in requiring modification of the face or requiring modification of TwoPointFiveLayer.

Putting all three layers in one class allows inter-layer design and optimization.

The alternate, having one abstraction per layer, enforces strict boundary of layer separation, and at the same time takes away the opportunity of inter-layer optimization.

The set of network layer, link layer, and socket layer services is what defines the characteristics of a face.

Having all layers in the same class allows easy communication between layers.

For example, duplicate suppression (#1282, a network layer feature) is only needed when the face communicates with a multicast group (information available at socket layer).

I know there's some problems in note-2 design, most prominently: The receive path is asymmetric with the send path: the receive path has a table-based dispatch, but the send path doesn't have it. I plan to drop table-based dispatch in the receive path, in favor of conditional statements.

In note-2 design, the receive path of link layer is more complex than send path. I plan to use a series of if or switch statements to invoke link layer features, instead of using a table-based dispatch.

Updated by Junxiao Shi about 11 years ago

Updated by Junxiao Shi about 11 years ago

At 20141205 technical issue discussion, Van said: there should be only one link layer header, in which all relevant fields are included. We shouldn't overly enforce layering and end up with fragmentation header + BFD header + other link layer headers.

Thus, I need to rethink the design of this part.

Updated by Junxiao Shi about 11 years ago

Updated by Junxiao Shi about 11 years ago

- Blocked by Feature #2520: NDNLPv2 high-level design added

Updated by Davide Pesavento almost 11 years ago

Updated by Davide Pesavento almost 11 years ago

- Target version changed from v0.3 to v0.4

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

- Blocked by deleted (Feature #2520: NDNLPv2 high-level design)

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

- Related to Task #2763: NDNLPv2: NACK, Fragmentation, NextHopFaceId, CachePolicy, IncomingFaceId added

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

- Subject changed from Face: link layer send and dispatch to NDNLPv2 design: link adaptation layer

- Description updated (diff)

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

- Related to Feature #1218: V2V LinkService added

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

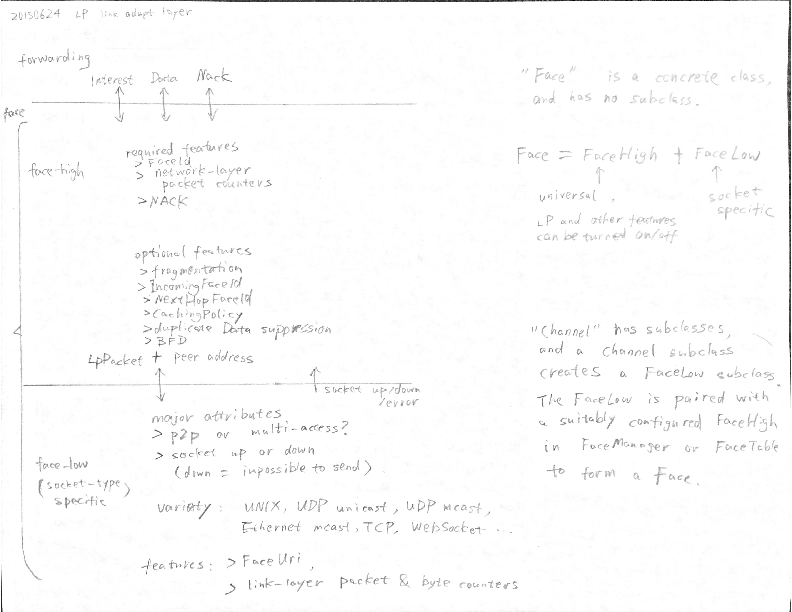

- File LAL_20150624.png LAL_20150624.png added

- Status changed from New to In Progress

My high level idea is to split a Face into FaceHigh and FaceLow, where FaceHigh is universal and implements all LP and other features, and FaceLow is specific to each socket type. The interface between FaceHigh and FaceLow is defined in terms of NDNLP packets.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

20150624 conference call discussed note-18 design.

It's clarified that:

FaceLowdoes not equalTransport. It's merely a wrapper over a socket where LpPackets can be immediately sent and received.- A

FaceLowsubclass may expose additional functions for fine-tuning, such as adjusting a socket option. - There is only one

FaceHigh. Its behavior (what features are enabled) can be set using flags.

We concluded that:

- The necessity of having a boundary between

FaceHighandFaceLowneeds additional justification. The assignee should provide a comparison between how LP looks like with or without the boundary, to show the benefit of having this boundary. - The current implementation has the equivalent of

FaceHighinFacebase class, and have the equivalent ofFaceLowsubclasses inFacesubclasses. PullingFaceHighfunctionality out fromFaceclass to a separateFaceHighclass, in order to allow "replacingFaceHighwith an alternate implementation", is probably overly modular, and would require a major change in face system. The assignee should provide additional evidence to show the benefit.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

- File LAL_20150628.pptx LAL_20150628.pptx added

- % Done changed from 0 to 10

I've made the high level design.

The slides contain partial answer to note-19 questions.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

At 20150710 conference call, @Beichuan requests that a class definition should be written to accompany note-20 design.

I'll try to do this before 20150715 conference call.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Headers for face system: https://gist.github.com/yoursunny/22a7d53711b4e9bc198a

revision 94c7466e5a6a1d2fe834f8e63d617f34100e5922 only declares Face Lal Link base classes.

More details about GenericLal and examples for concrete Links will be added later.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

why aren't you pushing the headers to gerrit?

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Answer to note-23:

These headers are design, not implementation.

They are not real code, but partially pseudocode.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Junxiao Shi wrote:

Headers for face system: https://gist.github.com/yoursunny/22a7d53711b4e9bc198a

The face system is not currently in the nfd::face namespace (only nfd), is it a typo or intentional? Unless you have a good reason, I would advise against excessive namespacing in an application. It clutters the code without any real benefits.

The enum class FaceStatus should be FaceState. It should also include an UNKNOWN value.

In struct LinkPacket, it's more common to distinguish between a "source" and a "destination" address, not "local" and "remote".

Get rid of generic_lal namespace. Options can be nested in class GenericLal.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Reply to note-25:

These are valid points that I will consider.

I'm currently more interested in opinions on a higher level of design: the division between Lal and Link, and the interface between these two defined around LinkPacket.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

- Related to deleted (Feature #1218: V2V LinkService)

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

- Blocks Feature #1218: V2V LinkService added

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Yes I forgot the most important comment.

I'm generally ok with the approach so far. My only concern is that now each face is composed of 3 different layers: this has some benefits in terms of modularity, code reuse and clear separation of concerns, but also introduces more virtual calls in the send path and more signal emissions in the receive path (both performance sensitive). I'm wondering if the composition of Face+Lal+Link can be done statically through the use of some template magic.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Reply to note-29:

I disagree with using templates to replace virtual calls and signals.

ndnSIM 1.0 ContentStore used that approach, and the result is ugly and unreadable code.

There's no benchmark result that proves the usage of virtual calls and signals is a major overhead.

Instead, benchmark has shown that the overhead is in tables and crypto operations, not virtual calls or signals.

Updated by Alex Afanasyev over 10 years ago

Updated by Alex Afanasyev over 10 years ago

How are you planning to "fail" the face from the Link abstraction (e.g., when socket dies)?

With Ethernet face as an example. Right now, it obtains MTU size to initialize "slicer". The slicer now becomes part of a "LAL". How it can be initialized?

Is the initialization logic for Ethernet face be moved completely outside Face hierarchy?LinkPacket structure. I don't quite see how we could generalize NetworkAddress and I see it right now as an unnecessary per-packet overhead. In my opinion, the information about network addresses should exist and be obtainable only when needed.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Alex Afanasyev wrote:

I still think we are moving towards an extreme level of modularity that we don't really need and which can hit us with performance penalties.

This statement needs more explanation.

- Modularity is a design goal of NFD.

- Modularity of this design is justified in note-20 attachment.

- Performance penalty is no more than a few virtual calls, which is insignificant compared to table lookups.

However, I'm ok overall with the proposed separation of function.

Thanks.

One thing that I'm kind of concerned is introduction of new terminology: "LAL" (which took me some time to realize that it is LinkAdaptationLayer". I would rather just use LinkAdaptor or just Adaptor (other abstractions don't have word "Layer" in them).

Probably yes.

How are you planning to "fail" the face from the Link abstraction (e.g., when socket dies)?

This is an unsolved question in note-22 design.

The design covers both LAL and permface, and it's not yet decided how to recover from a socket failure. Points to consider: TCP reconnect, up/down, re-run tunnel authentication procedure upon reconnect.

With Ethernet face as an example. Right now, it obtains MTU size to initialize "slicer". The slicer now becomes part of a "LAL". How it can be initialized?

The general idea is: Link shall tell LAL the MTU.

- For Ethernet, MTU is fixed and can be exposed as an attribute getter.

- For UDP over IPv6, LAL needs to perform PMTU discovery, which would involve a negative feedback to LAL when

Linkreceives the ICMPv6 error. - UDP over IPv4 can either go with 65535 octets minus UDP header, or use PMTU.

Is the initialization logic for Ethernet face be moved completely outside Face hierarchy?

Ideally it shouldn't.

I think we still would need a concept of a concrete faces (UdpFace, TcpFace, etc.), otherwise the important face creation/initialization logic would be scattered around and may be separated from implementation.

No, there's no UdpFace etc because it only defines half of the face type. The other half is the choice of LAL.

Face will be a non-inheritable final class.

Initialization logic should stay in face system (ie. not in management), and may appear in link, channel, and protocol factory; existing code already has some logic in channel and protocol factory.

It's preferred to have socket creation in Link because re-creating sockets may be necessary for permanent faces.

LinkPacket structure. I don't quite see how we could generalize NetworkAddress and I see it right now as an unnecessary per-packet overhead. In my opinion, the information about network addresses should exist and be obtainable only when needed.

Both ns-3 and .Net Framework have general NetworkAddress struct; sockaddr_storage is another choice.

NetworkAddress is tagged per packet, because potentially every packet can come from a different peer (multi-access link), and be received at a different local address (eg. an L2 interface with multiple unicast and multicast addresses). It cannot be obtained from the Link independent from the packet. But it can be stored in a format efficient for the Link, and converted only when requested.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

20150717 conference call discussed the answers in note-32.

"PMTU discovery" is unnecessary in NFD, because it never works well.

Instead, we can have Link supply an initial MTU, and (in code) allow this MTU to change during runtime.

It doesn't forbid PMTU discovery in the future, but does not require either LAL or Link to perform PMTU discovery now.

Initial MTU is read from physical interface configuration for Ethernet link, and assumed to be 65535 minus UDP header for UDP link.

Runtime MTU change is necessary even without PMTU discovery.

The physical interface MTU can be changed using ip link command in linux.

For UDP face, if physical MTU is a concern, we could provide a management command or configuration option to update the MTU used in LAL.

Runtime MTU change does not make LAL processing more complicated: the only places affected by MTU are fragmentation and piggyback.

Given MTU change is a rare event, it's unnecessary for LAL to do anything beyond starting limiting new LpPackets to the new MTU.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

One thing that I'm kind of concerned is introduction of new terminology: "LAL" (which took me some time to realize that it is LinkAdaptationLayer". I would rather just use LinkAdaptor or just Adaptor (other abstractions don't have word "Layer" in them).

I discussed this with Beichuan on 20150720.

We decide to rename:

Lal=>AdaptorLink=>Socket, to avoid ambiguity with Link objected used in mobility and routing scalabilityLinkPacket=>Socket::Packet

Design should also avoid the term "layer" because this term is ambiguous.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Junxiao Shi wrote:

Lal=>Adaptor

adaptor/adapter are also commonly used to indicate network cards (ethernet or wifi), so I'm not sure if this is a good name.

Design should also avoid the term "layer" because this term is ambiguous.

Why is it ambiguous?

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

20150724 conference call decides to use the following class names:

LinkService

GenericLinkService

Socket

Socket::Packet

Updated by Alex Afanasyev over 10 years ago

Updated by Alex Afanasyev over 10 years ago

I like LinkService (I'm guesing it is what used to be called Adaptor?), but I do not like Socket. Socket is a term that is heavily used in IP world and have a specific meaning, which is completely different how we are planning to use it...

Unfortunately, I don't have a good suggestion to make...

Updated by Anonymous over 10 years ago

Updated by Anonymous over 10 years ago

Not sure if this steers you one way or another, but Chronosync uses the term Socket.

Updated by Alex Afanasyev over 10 years ago

Updated by Alex Afanasyev over 10 years ago

ChronoSync's socket was an attemt to create analog of BSD socket, so it doesn't conflict with the common definition.

Per my understanding, the proposed socket is to refer to low-lever transport mechsnics of a sprcific protocol (ethernet, udp, tcp, websocket).

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

- Subject changed from NDNLPv2 design: link adaptation layer to NDNLPv2 design: link service

- % Done changed from 10 to 30

revision 4f80ace18b8e5c934da8ed25709213f5c35de5ba fulfills the renaming in note-36, and adds a few comments about how GenericLinkService should be implemented to support fragmentation+reassembly, Nack, IncomingFaceId, NextHopFaceId, CachePolicy.

I don't plan to design in more detail at this stage, but I can fix problems in this high-level design.

Design of fragmentation+reassembly and encoding/decoding procedures are up to implementer.

This issue will remain open until all planned NDNLPv2 features are incorporated in GenericLinkService.

To avoid blocking implementation issues, they should be created as "related to" rather than "blocked by" this issue.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

- Related to Task #3088: Refactor Face as LinkService+Transport added

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Reply to note-39:

The semantics of Socket is neither "BSD socket" nor "low-level transport".

A Socket can send or receive TLV blocks, with no guaranteed delivery and no guaranteed order.

Maybe it should be called Transport, similar to ndn-cxx Transport?

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

20150814 conference call approves structural design in note-40.

Socket terminology is still pending further discussion.

Transport may be confused with OSI transport layer.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

I'm uploading the design slides (obsoleting LAL_20150628.pptx) to reflect the LinkService terminology.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

What is a

NetworkAddress?

This has been partially answered in note-32. I'll add the design intention.

NetworkAddress is a data structure that represents an underlying address.

It also has a special value ANY.

The exact value of NetworkAddress is unimportant because it's neither used by LinkService nor used by forwarding.

Equality of NetworkAddress is important for reassembly on a multi-access link, and for #2108.

For incoming packets:

- (point-to-point link)

remoteAddrof all incoming packets should compare equal. - (multi-access link)

remoteAddrof two incoming packets should compare equal if they are transmitted by the same peer, and unequal if they are transmitted by different peers. localAddrindicates the address of local endpoint. It should not equal anyremoteAddr.- A link may have multiple distinct

localAddrs if it binds to multiple local addresses.

For outgoing packets:

- (point-to-point link)

remoteAddris ignored. - (multi-access link) Setting

remoteAddrto ANY causes the packet to be multicast. - (multi-access link) Setting

remoteAddrto theremoteAddrof a peer causes the packet to be unicast toward a specific peer, if the link is capable of handling both unicast and multicast. localAddrspecifies the preference among multiple local addresses.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

I think using the term "socket" carries a very high potential for confusion. In my mind "socket" has a very specific meaning (a mechanism and abstraction to access some facilities provided by the OS) and I've never seen any other software overload that meaning with something different. A class named Socket would be fine if it was just a wrapper of a BSD socket, but it seems to be more than that according to the current proposal. On a more practical level, it would also create confusion between Asio's sockets and "our" sockets when talking about the implementation.

"Transport", on the other hand, is more flexible and I've seen several libraries use the term "transport" to indicate an abstraction of the underlying mechanism to transport messages between two endpoints. So if the choice is between "socket" and "transport", I definitely prefer the latter.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Junxiao Shi wrote:

For outgoing packets:

[...]

- (multi-access link) Setting

remoteAddrto ANY causes the packet to be multicast.

What if the link has joined multiple multicast groups?

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Answer to note-47:

A link shouldn't join multiple multicast groups. Construct a separate link for each multicast group.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Well I'm confused by what you mean by "link" now... Without a previous definition, I assumed you meant roughly the equivalent of a linux network interface, i.e. the output of ip link, or the NFD abstraction of it.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

20150825 conference call decides to rename Socket to Transport. I'll update design documents.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Design is updated to fulfill note-50.

https://gist.github.com/yoursunny/22a7d53711b4e9bc198a/26c216b4eb2519edad4e989cbb2b53b727416f97

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

- Related to Feature #3173: Developer Guide: Face=LinkService+Transport and GenericLinkService added

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

revision 95d76904f3e8a4028e38356b41ec4b391838c55f adds a .close() method to Face and Transport classes. FaceState enum is extended to have CLOSED,FAILED states.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

revision a7024bd991a591b861a27f337b3bd498aad332bf changes Face constructor to accept unique_ptr<LinkService>, unique_ptr<Transport> rather than LinkService&, Transport&, so that the Face owns a LinkService and a Transport.

Otherwise, it would be unclear who is responsible for keeping these objects alive.

unique_ptr is chosen over shared_ptr because LinkService and Transport are part of a Face, and cannot be shared.

If need arises, I can provide getters that returns a mutable reference for these objects.

Also, I'm adding the FaceScope static attribute, a public setter for FacePersistency, and protected setters for other static attributes in Transport so that subclass can initialize them without having to use a long parameter list on the constructor.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

revision b0d570b1fae1f7b02ae675a2a1cd3ed488f911cd:

- changes

NetworkAddresstoEndpointIdas decided in #3179 note-8 - deletes

Transport::Packet::localAddrfield, because there's a dispute, and I don't have a good argument for its necessity at this moment - moves

FaceIdintoTransport, addsFaceLogHelpertemplate, so thatNFD_LOG_FACEmacro can be used inFace Transport LinkService

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Idea for another change:

Transport::setLinkService(LinkService&): this gives aLinkServicereference toTransport, so that receiving a packet could be a function call rather than a signal.LinkService::setFace(Face&)andTransport::setFace(Face&): this allows moving the FaceId back toFace, which is more logically correct than note-55's decision of putting it intoTransportin order to support logging.- All these are invoked in

Faceconstructor.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

revision 9683f54e68351b9761f22eb1883f76e8ab9485b1 fulfills note-56.

This one is a larger design change that requires an approval before implementation.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

20150910 conference call approves note-57 design.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

revision 192a8dcc1ba4b487c17c400ac1ecf62f620d28aa changes the visibility of Transport::doClose and Transport::beforeChangePersistency from private to protected.

The change for Transport::doClose is necessary because MulticastUdpTransport::doClose needs to invoke DatagramTransport::doClose (#3168 note-23).

The change for Transport::beforeChangePersistency is reasonable because when a transport inherit from another, both transports should be able to get notified upon a change of persistency setting.

Transport::doSend remains private because I can't imagine a valid scenario where a transport needs to invoke doSend on its base class.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

- Description updated (diff)

why packets are sometimes passed by

const&and sometimes by&&

There are two kinds of packets at Face LinkService Transport API:

- network-layer packets (

Interest Data Nack) are passed by const l-value reference.- send path: the packet is controlled by and retained in forwarding, so it cannot be moved into face system

- receive path: the packet is passed to forwarding via a

ndn::util::Signal, which does not support r-value reference

- NDNLP packets (contained in

Transport::Packet) are passed by r-value reference.- Both send path and receive path use function calls, so r-value can be used.

- r-value is chosen over l-value to reduce the small overhead of copying

Blockstruct.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Junxiao Shi wrote:

- network-layer packets (

Interest Data Nack) are passed by const l-value reference.

- send path: the packet is controlled by and retained in forwarding, so it cannot be moved into face system

So the LinkService has to copy the network-layer packet to create an NDNLP packet, right?

* receive path: the packet is passed to forwarding via a `ndn::util::Signal`, which does not support r-value reference

Fair enough. So who owns the packet before it is passed to forwarding?

- NDNLP packets (contained in

Transport::Packet) are passed by r-value reference.

- Both send path and receive path use function calls, so r-value can be used.

- r-value is chosen over l-value to reduce the small overhead of copying

Blockstruct.

Absolutely. So Transport::Packets are always moved up and down the chain via std::move and rvalue references. Sounds good, except that I don't understand the ownership here... for example, how do we guarantee that the buffer supplied to asio's async_send is kept alive until the completion handler is invoked?

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Answer to note-61:

Encoding a network-layer packet into LpPackets always involves copying, because additional headers are prepended.

Sending bare network-layer packets onto the wire is a special case, which isn't worth an optimization at the cost of API complications, unless its benefit is proven by a benchmark in the future.

At receive path, LinkService owns the network-layer packet before it's passed to forwarding.

Transport::Packet is owned by either LinkService or Transport.

During an async_send, one trick is to bind a copy of the ConstBufferPtr (or the Block which contains the ConstBufferPtr) onto the send completion callback, so that the underlying Buffer is kept alive.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Junxiao Shi wrote:

Encoding a network-layer packet into LpPackets always involves copying, because additional headers are prepended.

Not entirely true. If it's just prepending/appending additional stuff (without touching the existing buffer), then Boost.Asio supports scatter/gather in send/receive, and we can "easily" use that mechanism to avoid copying.

Sending bare network-layer packets onto the wire is a special case, which isn't worth an optimization at the cost of API complications, unless its benefit is proven by a benchmark in the future.

Absolutely. I'm not objecting to this approach, I asked about the copy just to check if I understood correctly.

At receive path, LinkService owns the network-layer packet before it's passed to forwarding.

Transport::Packetis owned by either LinkService or Transport.

During anasync_send, one trick is to bind a copy of theConstBufferPtr(or theBlockwhich contains theConstBufferPtr) onto the send completion callback, so that the underlyingBufferis kept alive.

Yeah I know this trick. So there is actually a point in time when the packet/buffer is owned by Asio via the bound completion handler. This is a valid solution, because the boost doc says that the handler will be "copied as required".

The important thing is that the Block passed to std::bind is either copied or moved (Block is movable so std::bind should be able to avoid the copy). In other words, std::ref and std::cref MUST NOT be used in this case.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Another "lifetime" question: since the transport is owned by LpFace, and destroyed at the same time (it's a unique_ptr), how can the transport defer its own destruction until after all pending Asio callbacks have been invoked?

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Answer to note-64:

I didn't think about this question in the original design.

My current idea is:

- For a normal close (usually from a FaceMgmt command), invoking

Transport::closerequests the transport to close itself, after which the state changes toCLOSING. TheFaceis destroyed only afterTransportcompletes cleanup procedure and changes the state toCLOSED. - For a abnormal failure (socket error on non-permanent face),

Transportshould not change state toFAILEDuntil cleanup procedure is complete.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Junxiao Shi wrote:

- For a normal close (usually from a FaceMgmt command), invoking

Transport::closerequests the transport to close itself, after which the state changes toCLOSING. TheFaceis destroyed only afterTransportcompletes cleanup procedure and changes the state toCLOSED.

Yep, this was my idea too.

- For a abnormal failure (socket error on non-permanent face),

Transportshould not change state toFAILEDuntil cleanup procedure is complete.

I don't see why you want to handle this differently. I would extend the state machine in this case as well. Do we need a different "transient" state or can we use the same as the normal close case? Probably we need two different states, otherwise the transport won't be able to know which state it has to move to at the end of the cleanup (the code path is the same).

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Reply to note-66:

I think it's better to have CLOSED as the only final state, which indicates the Face can be deallocated.

All possible transitions are:

- UP=>DOWN=>UP, link failure and recovery on permanent face

- UP=>FAILED=>CLOSED, abnormal failure on non-permanent face

- UP=>CLOSING=>CLOSED, DOWN=>CLOSING=>CLOSED, normal close (by management command)

Calling Face::close has no effect if the state is already FAILED.

I'd also rename the FaceState enum to TransportState, and then typedef TransportState FaceState.

In the future, LinkService could introduce more states such as UNAUTHENTICATED (for tunnel authentication #1285), but those additions should only appear in FaceState and should not affect TransportState.

Updated by Davide Pesavento over 10 years ago

Updated by Davide Pesavento over 10 years ago

Junxiao Shi wrote:

I think it's better to have CLOSED as the only final state, which indicates the

Facecan be deallocated.All possible transitions are:

- UP=>DOWN=>UP, link failure and recovery on permanent face

- UP=>FAILED=>CLOSED, abnormal failure on non-permanent face

- UP=>CLOSING=>CLOSED, DOWN=>CLOSING=>CLOSED, normal close (by management command)

OK

Calling

Face::closehas no effect if the state is alreadyFAILED.

Face::close is meaningful only if the face is UP or DOWN I think. It should be a no-op in all of the remaining cases.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

revision 9a85d9f89c690280dc6b104a4e249a4fde2f9a57 fulfills note-67.

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

revision 7d98aa83d8e682d26ef16fdaeba7ab1d5f81830a adds Transport::getMtu (#3253, reached consensus in #3170 note-16) and counters (#3177, needs design review).

Updated by Eric Newberry over 10 years ago

Updated by Eric Newberry over 10 years ago

Why are there two classes named TransportCounters in the spec?

Updated by Junxiao Shi over 10 years ago

Updated by Junxiao Shi over 10 years ago

Why are there two classes named

TransportCounters?

Obviously that's a typo.

Fixed in revision 653021672f7f7a58e3a5aec1bf365d4f99319ed3.

Updated by Junxiao Shi about 10 years ago

Updated by Junxiao Shi about 10 years ago

- Target version changed from v0.4 to v0.5

This issue will remain open until all planned NDNLPv2 features are incorporated in

GenericLinkService.

Updated by Junxiao Shi about 10 years ago

Updated by Junxiao Shi about 10 years ago

- Status changed from In Progress to Closed

- Target version changed from v0.5 to v0.4

- % Done changed from 50 to 100

This is closed together with #2520.